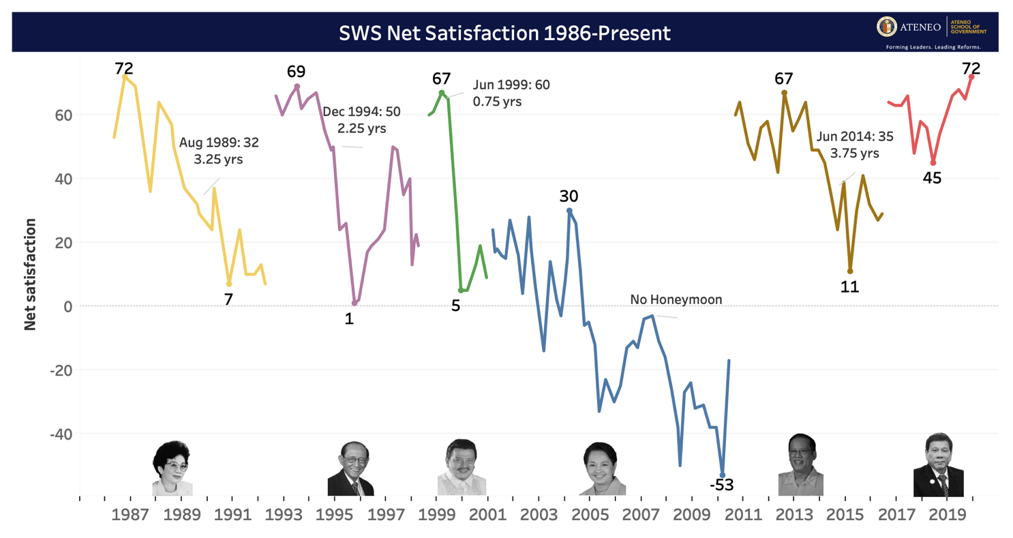

According to data released in January 2020, Philippine President Rodrigo Duterte received a record-high satisfaction rating in the fourth quarter of 2019: an “excellent” net satisfaction rating of +72, well above his +65 rating in September 2019 and eclipsing his previous high of +68, recorded in the second quarter of 2019. The survey results immediately generated much punditry on what this means for government policy, divisive and controversial as some of these policies may be.

And yet, based on our empirical examination of potential drivers of net presidential satisfaction, we found very little correlation between economic policy outcomes and presidential approval, despite economic outcomes like inflation and wages topping the concerns of citizens consistently across different surveys.

In fact, a snapshot of net presidential satisfaction results in the Philippines shows a boom-bust cycle, wherein presidents start out with high ratings, only to face a precipitous decline toward the end of their terms. This is not unlike the boom-bust pattern of a volatile stock market. In the figure below, the years refer to the period during which each president maintained a rating above +30, which is considered the “honeymoon period.” So far, this honeymoon has never reached four years. Duterte (at the time of this writing) comes closest to breaking that record.

Data source: Social Weather Stations media releases

If top policy issues are not linked to presidential approval, then what drives it? It might be useful to unpack some basic assumptions behind surveys. Access to information is one of the key factors thought to influence survey respondents’ perceptions. Put simply, citizens can assess their leaders more effectively if they have (at least) adequate access to facts and evidence, and if they are also able to translate this information into rational and truthful survey responses. But the “rational survey respondent” faces several weaknesses. We can better explain this by drawing an analogy with stock price bubbles.

We expect stock prices to reflect an assessment of a company’s future returns. This assumes, of course, that investors have perfect access to information on the company and on the conditions in which it will eventually operate. That assumption is far from the reality most investors face, as we routinely see over-hyped assets and subsequent dramatic corrections in value. A good example is Bitcoin: investors jumping onto the bandwagon pushed its value up thousands-fold, only for it to decline heavily once evidence of a bubble became clear.

Evidence of Herd Behavior

Herd behavior refers to the phenomenon in which the actual or perceived preferences and actions of a large group influence individual decisions and actions. Past research has shown that people tend to imitate other people’s choices not just to be accepted but also to be safe. Another mechanism in herd behavior is people’s receptivity to social norms and their dependence on information obtained from others through social channels. In the Philippine context, this simply means that the perceptions of local communities have a deep influence on personal perceptions. Yet this logic is circular: if information is imperfect, perceived strong support by the group can be self-reinforcing. That is the characteristic of a herd.

New literature on herd behavior has started to apply the concept to political phenomena, including presidential approval ratings. In the Philippine setting, there are various reasons why citizens (and their local leaders) join the bandwagon to support each president: gaining access to public services and currying favor with the state apparatus are not uncommon motives, given the pervasive role of the Philippine government and the still-large inequality in wealth and opportunities facing the population.

In our study, we found evidence of herd behavior: a respondent’s perception of the group’s satisfaction appeared to be linked to the respondent’s own answer on satisfaction. Hence, some of the assumptions about the “rational and well-informed survey respondent” are probably just as vulnerable as the assumptions about the “rational and well-informed investor.”

Like over-hyped stocks, we expect that presidential satisfaction tends to be sticky and high, initially, but with more information over time, this can deteriorate fairly quickly. The decline is often precipitous, since the “bubble” is not underpinned by fundamental factors eliciting support, and it could easily unwind. We find evidence to this effect in our study. (And the boom-bust cycle illustrated earlier provides further evidence to support this view.)

Fake News Linked to Presidential Perceptions?

Inadequate access to good quality information is one challenge; the proliferation of misinformation and fake news is a relatively new and important complicating factor in recent years due to improvements in access to technology (notably social media).

We found evidence that respondents who are more likely to support Duterte are also more likely to believe the false claim that “drug overdose is one of the leading causes for death for the youth.” The war on drugs is one of the president’s flagship programs, and there has been extensive debate on the statistics used to back it. The end result is a confusing environment in which to form opinions.

Recent research in other countries has exposed possible perception bias: certain groups of citizens can mistrust certain true stories and consider them false news, and can likewise trust certain false stories (fake news) and consider them true. Hence, the type of fake news matters, and so do the inherent biases of different groups of citizens. In fact, there is strong evidence of more intense dissemination of misinformation and fake news in the run-up to both the 2016 U.S. presidential election and the 2019 UK general election. This underscores the importance of further research in this area, in order to better understand the implications of access to bad information for political behavior.

Understanding Surveys in a Muddy Environment

We face growing international evidence that the spread of misinformation, particularly on social media, can skew the perceptions of some groups in society and shape their political behavior, including during elections but also presumably in much simpler exercises such as surveys. To better appreciate and interpret what the survey results tell us, we need to embark on a broader multidisciplinary research agenda.

For instance, there is evidence that people with weaker cognitive abilities are less able to shake off their initial misperceptions and more vulnerable to misinformation. Meanwhile, new studies also show that repeating a false claim tends to produce an “illusion of truth effect.” Lab experiments have shown that “repetition increased the subjects’ perception of the truthfulness of false statements, even for statements they knew to be false.”

One should note the various strategies and methods that could now be deployed to shape public perceptions, sometimes in pernicious ways. The use of troll armies, micro-targeting, and the deliberate spread of fake news are often not just undemocratic; they are also increasingly at odds with national security and social cohesion. Efforts by some groups (and even state actors) to confuse, misdirect, and divide society are among the emerging threats.

Technology has helped to put propaganda machines on steroids — and the objective in some instances is not to drive home a particular narrative, but to flood society with confusing misinformation. The potential effect is that existing social divides become even more pronounced, and consensus and collective action ultimately become even more elusive.

What do surveys actually capture when all these issues contaminate the information environment? We need deeper analysis to better understand survey results. Punditry in the absence of evidence merely feeds the herd more bad hay.

Ronald Mendoza, Ph.D., is Dean of the Ateneo de Manila University School of Government. Tristan Canare, Ph.D., is Deputy Director of the Rizalino Navarro Center for Competitiveness of the Asian Institute of Management. This article draws on a new study by the Ateneo Policy Center, which can be downloaded here.